If you’ve followed the world of diesel trucks for the past decade, you’re no doubt aware of the drama surrounding aftermarket tuners and defeat devices used to skirt emissions requirements. For years, the Environmental Protection Agency and the Department of Justice have gone after manufacturers, distributors, and importers of these devices, as well as individuals who use them on their trucks, for violating the Clean Air Act.

Historically, the DoJ has gone after perpetrators by pursuing civil penalties in the form of fines. But in some cases, the agency has also pursued criminal penalties that include higher fines, probation, or actual jail time. The past few years have seen a handful of high-profile cases in which diesel tuners have been sent to prison for designing, installing, or selling defeat devices, in addition to having to pay civil penalties.

That policy is apparently coming to an end. The Department of Justice has announced that it plans to stop pursuing criminal charges for these crimes. Here’s what that means.

Civil Charges Over Criminal Charges

The DoJ announced yesterday afternoon on X that it will no longer pursue criminal charges under the Clean Air Act when the allegations involve tampering with onboard diagnostic devices in motor vehicles.

Today, @TheJusticeDept is exercising its enforcement discretion to no longer pursue criminal charges under the Clean Air Act based on allegations of tampering with onboard diagnostic devices in motor vehicles.

— DOJ Environment and Natural Resources Division (@DOJEnvironment) January 21, 2026

In a follow-up post, the DoJ said it was “committed to sound enforcement principles, efficient use of government resources, and avoiding overcriminalization of federal environmental law.” The DoJ also clarified that it would still pursue civil penalties “when appropriate.”

A DoJ memo obtained by CBS News ordered federal prosecutors to stop pursuing criminal cases against those selling, distributing, or manufacturing defeat devices.

The edict, issued by Deputy Attorney General Todd Blanche, marks the first time that the Justice Department has formally taken steps to scale back environmental criminal enforcement since President Trump took office in January 2025.

In the memo, Blanche wrote that he was taking this step “to ensure consistent and fair prosecution under the law, as well as to ensure the best use of Department resources,” according to a copy reviewed by CBS News.

The decision means that violators can no longer be subject to jail time, but it doesn’t mean they’re totally off the hook. The Clean Air Act is still enforceable by the EPA, and civil penalties still apply. In theory, that means Cummins would still have had to pay its nearly $1.7 billion civil penalty for installing emissions-cheating devices on engines found in Ram 2500 and 3500 pickups.

As for why the DoJ made this change, CBS reports that the push came from Adam Gustafson, the principal deputy assistant attorney general for the DoJ’s Environment and Natural Resources Division, who was appointed in February.

The push to kill all of the pending defeat device cases was championed by Adam Gustafson, the principal deputy assistant attorney general for the Justice Department’s Environment and Natural Resources Division who previously worked for Boeing and at the EPA, according to two of those sources and government records seen by CBS News.

He has not specialized in the practice of criminal environmental law.

Although Gustafson has previously signed off on at least some of the pending indictments involving after-market defeat devices, a new and novel defense bar argument that surfaced over the summer later changed his mind, the sources said.

That argument, according to CBS, came from the owners of Racing Performance Maintenance Northwest, a shop in Washington state. The two owners were convicted last year of conspiring to violate the Clean Air Act after pleading guilty to tampering with a monitoring device, and each was fined $10,000 and sentenced to three years of probation. One of the owners later appealed the conviction using a legal theory that Gustafson found persuasive.

Her attorneys put forth a legal theory alleging that she cannot be held criminally liable because the software associated with emission controls, known as “onboard diagnostic systems,” is not “required to be maintained” under the Clean Air Act.

For this reason, they claimed that such an offense can only be charged as a civil violation, not a criminal one.

Whether you agree with that argument will depend on a lot of things, but for what it’s worth, it sounds like the folks at the EPA have a different opinion. From CBS:

An internal EPA memo reviewed by CBS News shows that career attorneys disagree with the arguments made by defense lawyers in the 9th Circuit case. The memo argues that there are “multiple respects” in which diesel truck emissions software systems are “required to be maintained” under the law, and therefore tampering with them can be a crime.

“When Congress enacted the Clean Air Act, legislators sought to ensure that regulated motor vehicles/engines would meet applicable emission standards not only at the time of manufacture and initial sale, but thereafter in everyday use,” the memo says.

Although the 9th Circuit has not yet ruled on the matter, the legal theory resonated with Gustafson, who started raising questions about the pending cases, one of the sources said.

How The Clean Air Act Has Been Enforced Up Until Now

The Clean Air Act is a wide-ranging law, but in the case of vehicle emissions cheating, it outlaws the manufacturing, selling, or installing of a defeat device, which is “a part for a motor vehicle that bypasses, defeats, or renders inoperative any emission control device,” according to the EPA. The Act also prohibits anyone “from tampering with an emission control device on a motor vehicle by removing it or making it inoperable prior to or after the sale or delivery to the buyer.” Violators are subject to civil penalties “up to $45,268 per noncompliant vehicle or engine, $4,527 per tampering event or sale of defeat device, and $45,268 per day for reporting and record keeping violations,” according to the EPA.

There have been numerous criminal cases brought by the Justice Department based on the Clean Air Act. The most high-profile case is, of course, Volkswagen’s “Dieselgate” scandal, in which researchers discovered the company had secretly installed defeat devices to bypass emissions regulations on around 11 million cars worldwide. More recently, Hino Motors, a subsidiary of Toyota, pleaded guilty in March 2025 to a multi-year emissions fraud scheme involving its diesel engines. No one went to jail, but a judge sentenced Hino to five years of probation, during which it won’t be able to import diesel engines into the U.S., according to Reuters.

It’s not just OEMs that were subject to criminal prosecution. The DoJ routinely pursued cases against aftermarket defeat device manufacturers, distributors, and installers. In February 2025, an Indiana man was sentenced to four months in prison and given a $25,000 fine after pleading guilty to conspiring to violate the Clean Air Act by tampering with monitoring devices on “hundreds” of vehicles, which grossed him $4.3 million between 2019 and 2021, according to the DoJ.

Back in December 2024, Troy Lake Sr., the owner of the Colorado-based Elite Diesel Service Inc., pleaded guilty to disabling onboard diagnostic systems on at least 344 heavy-duty commercial trucks. He was ordered to pay fines totaling $52,200 and sentenced to one year and one day in federal prison. Lake Sr. served seven months in jail before being released to house arrest to serve out the remainder of his sentence, but was pardoned by President Trump in November 2025.

Trump’s pardon of Lake Sr. came at the behest of Republican Senator Cynthia Lummis of Wyoming, who said in a statement that the case was “yet another example of how federal agencies have been weaponized by Democrat administrations against hardworking Americans.”

This move also follows a year of the administration rolling back environmental protection policies aimed at reducing emissions, most notably starting the process to ease fuel economy requirements for new cars and eliminating fuel economy penalties handed out to automakers over the past three years — with the administration’s stated goal being to reduce vehicle costs to the consumer and to help the auto industry. It’s entirely plausible that this move to end criminal prosecutions for defeat device installers and manufacturers is another step in that direction, rather than purely due to different interpretations of the law.

Why You Should Care

There are two sides to this dispute, both with fairly reasonable arguments. On the one hand, people who own their trucks should be able to modify them how they’d like—it’s their property after all, that they paid for with their own money. What they do with their property shouldn’t be anyone’s business but their own. If they want to add things like wider tires, aftermarket intakes, shorter gearing, or different software after the truck has left the factory, they should be able to. This is, in a nutshell, the thought process the DoJ is using to pivot away from criminal prosecutions with regard to emissions tampering.

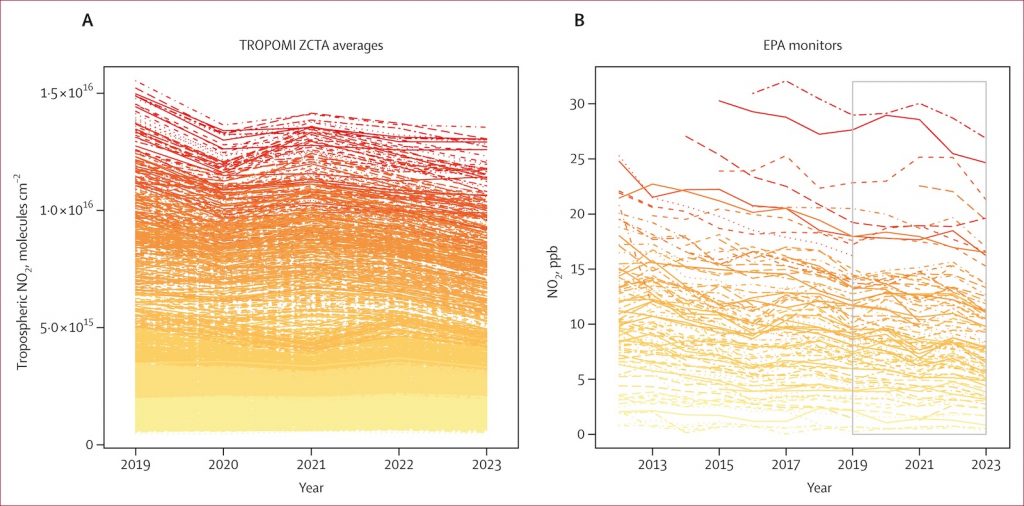

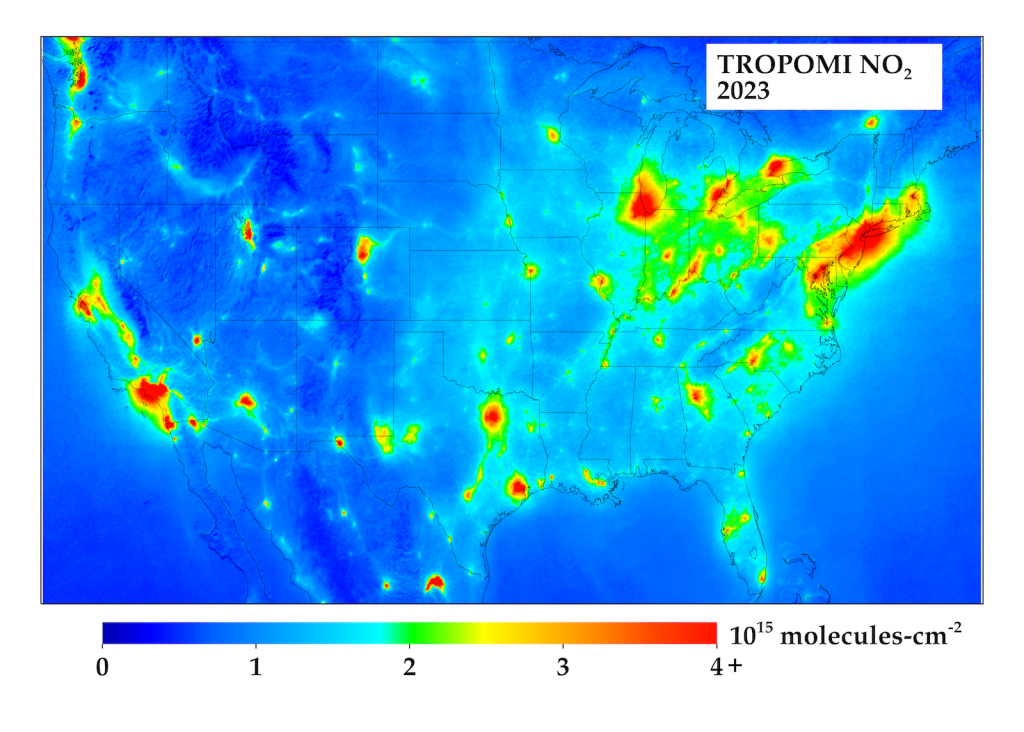

On the other hand, diesel trucks with defeat devices can be terrible for the air we breathe. A study released by the EPA in 2020 found that more than 550,000 trucks in the decade leading up to the study had their emissions controls tampered with or removed; the results were not good. From the study:

As a result of this tampering, more than 570,000 tons of excess oxides of nitrogen (NOx) and 5,000 tons of particulate matter (PM) will be emitted by these tampered trucks over the lifetime of the vehicles. These tampered trucks constitute approximately 15 percent of the national population of diesel trucks that were originally certified with emissions controls. But, due to their severe excess NOx emissions, these trucks have an air quality impact equivalent to adding more than 9 million additional (compliant, non-tampered) diesel pickup trucks to our roads.

This is also far worse than anything seen from Volkswagen’s folly, according to the guy in charge of the firm that uncovered the Dieselgate scandal. From The New York Times:

In terms of the pollution impact in the United States, “This is far more alarming and widespread than the Volkswagen scandal,” said Drew Kodjak, executive director of the International Council on Clean Transportation, the research group that first alerted the E.P.A. of the illegal Volkswagen technology. “Because these are trucks, the amount of pollution is far, far higher,” he said.

These emissions have real consequences. Nitrogen dioxide and the 5,000 extra tons of industrial soot emitted by these cheating trucks are linked to lung damage and can aggravate existing respiratory diseases such as asthma, according to the EPA. Data released by the agency in October suggests that particulate matter causes 15,000 premature deaths every year.

No matter the underlying reason, going forward, the consequences for tuning your diesel truck to roll coal (as an example — there’s other tuning done for drivability/durability reasons) will be a little less dire. Not that I recommend doing it.

Top graphic image: DepositPhotos.com, Apple

The post The U.S. Will No Longer Criminally Charge People Who Emissions-Delete Diesel Trucks appeared first on The Autopian.

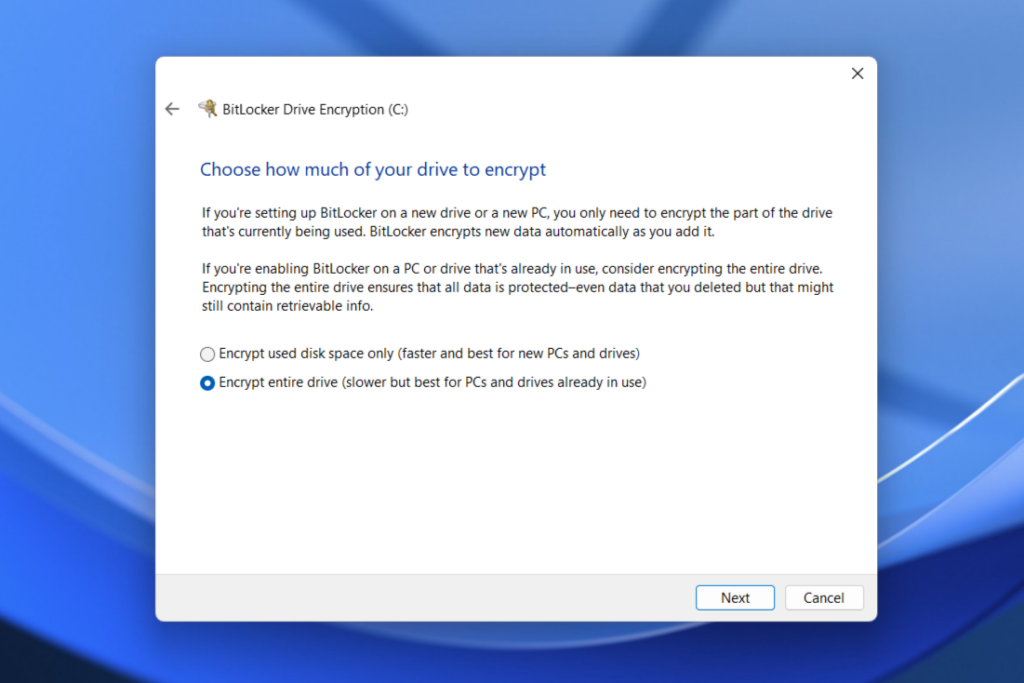

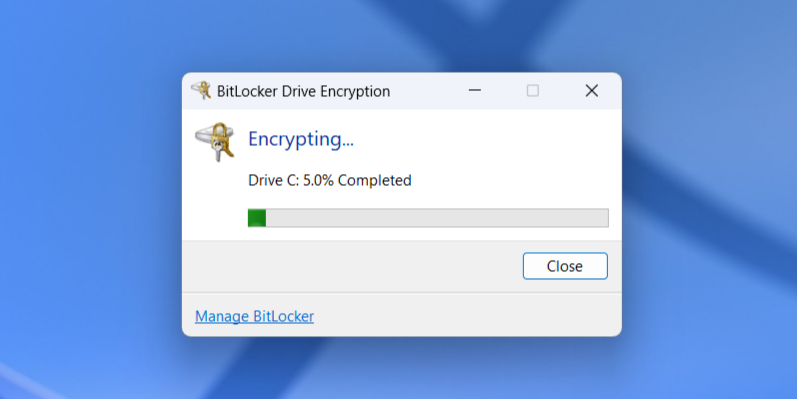

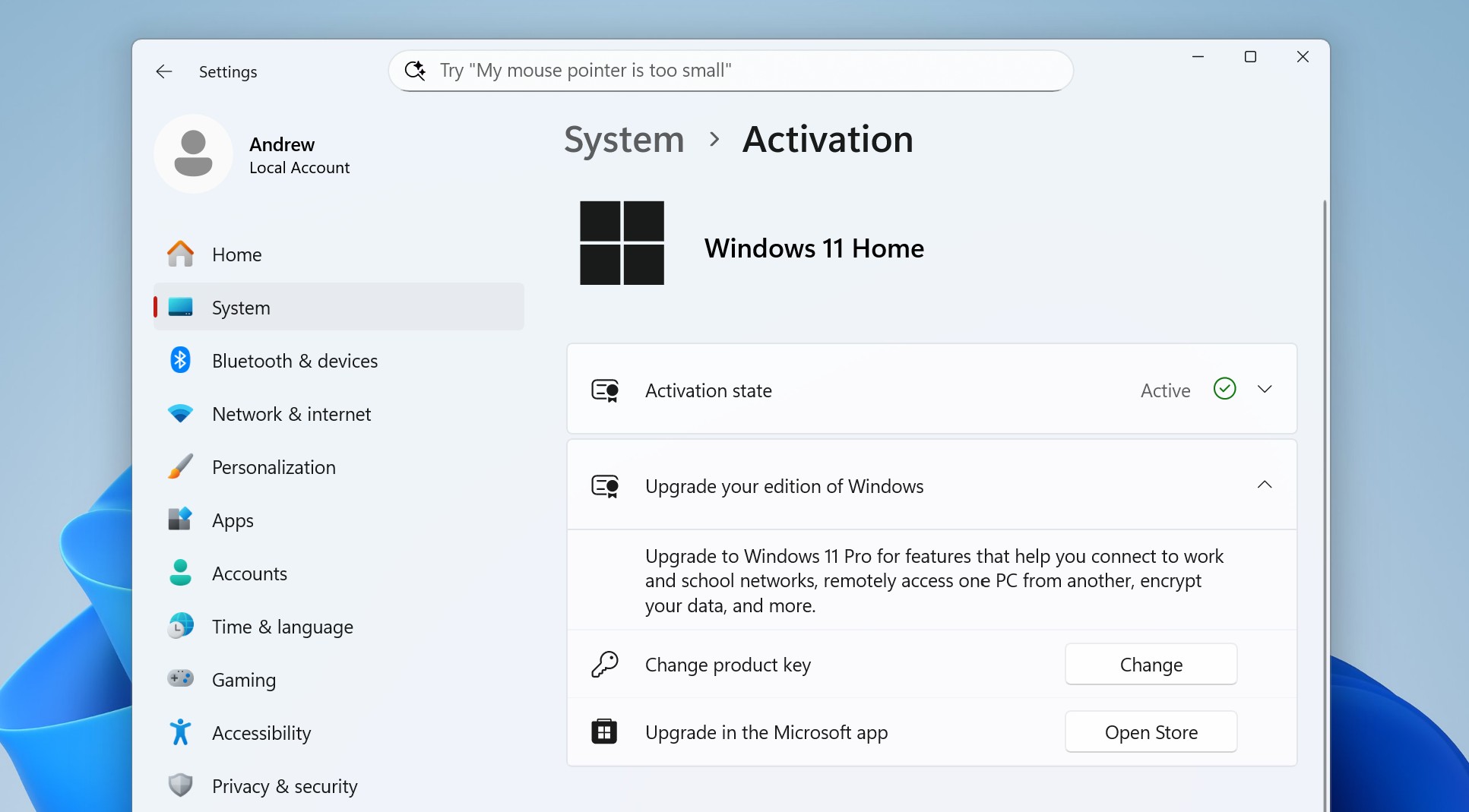

Settings > System > Activation will tell you what edition of Windows 11 you have and offer some options for upgrades.

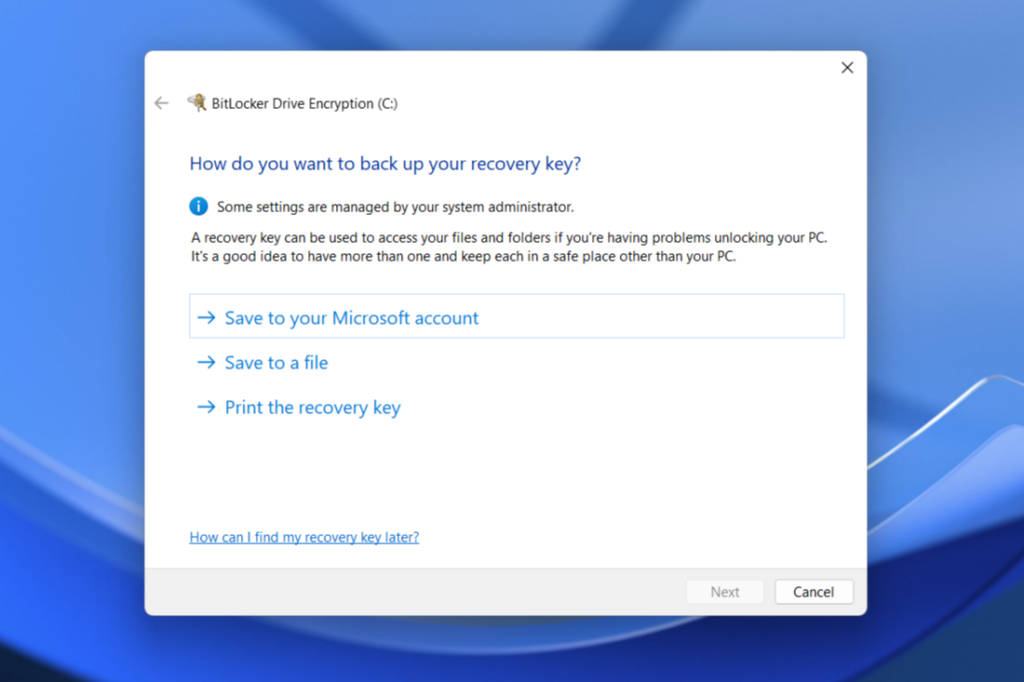

Credit:

Andrew Cunningham

Settings > System > Activation will tell you what edition of Windows 11 you have and offer some options for upgrades.

Credit:

Andrew Cunningham

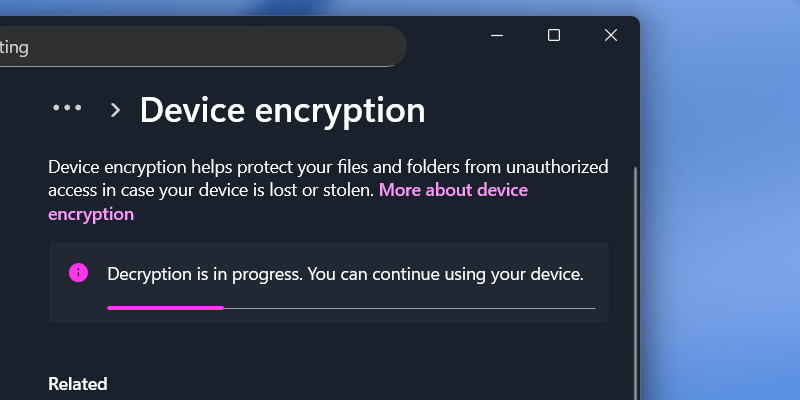

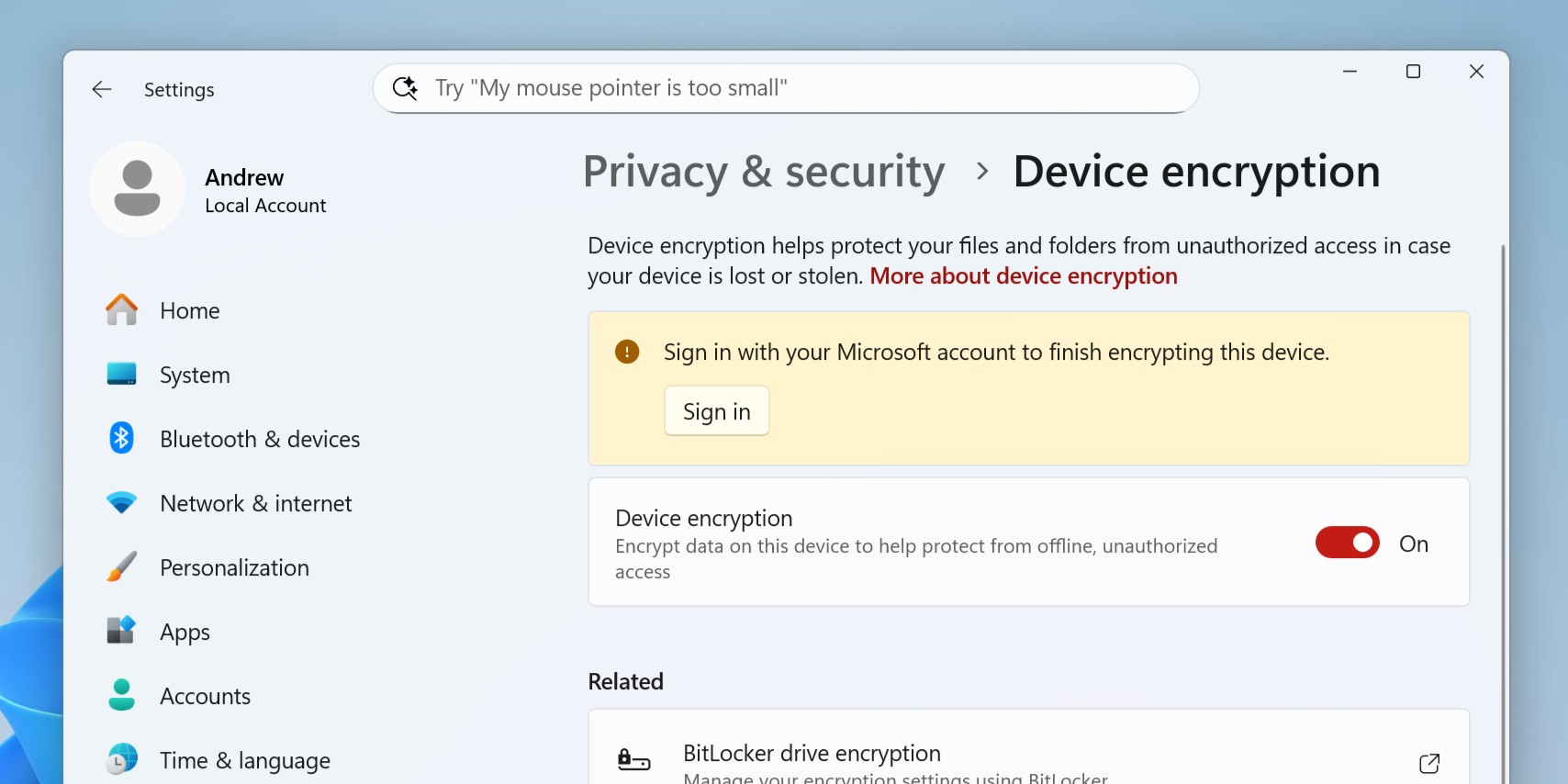

If you haven't signed in with a Microsoft account, you won't have a key saved to Microsoft's servers, and you can skip the decryption step.

Credit:

Andrew Cunningham

If you haven't signed in with a Microsoft account, you won't have a key saved to Microsoft's servers, and you can skip the decryption step.

Credit:

Andrew Cunningham